First Electronic Computer

After Babbage’s time, mechanical calculators became increasingly complex, in particular at a company named International Business Machines, created in 1911 and better known as IBM. However, it was during the Second World War that the first completely electronic computer was designed. Called the ENIAC, it could perform 5,000 additions per second. ENIAC was developed and built for the U.S. Army's Ballistic Research Laboratory. After its move to the Army's Aberdeen Proving Ground, it was turned on in 1947 and was in continuous operation until 11:45 PM on October 2, 1955. ENIAC used ten-position ring counters to store digits. Arithmetic was performed by "counting" pulses with the ring counters and generating a carry pulse whenever a counter "wrapped around" from 9 back to 0. The idea was to emulate in electronics the operation of the digit wheels of a mechanical adding machine.
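The pulse-counting idea can be illustrated with a small simulation. This is only a sketch of the principle described above, not a model of ENIAC's actual circuitry: the names `RingCounter`, `add`, `carry`, and `value` are invented for illustration, and each counter is reduced to a single number from 0 to 9.

```python
class RingCounter:
    """One decimal digit held in a ten-position ring (illustrative model only)."""

    def __init__(self, digit=0):
        self.position = digit  # which of the ten positions is currently "on"

    def pulse(self):
        """Advance one position; return True if the ring wrapped from 9 to 0."""
        self.position = (self.position + 1) % 10
        return self.position == 0


def carry(counters, place):
    """Send one carry pulse to the counter at `place`, rippling further if it wraps."""
    if place < len(counters) and counters[place].pulse():
        carry(counters, place + 1)


def add(counters, amount):
    """Add a non-negative integer by pulsing each digit's counter.

    `counters` is ordered least significant digit first. A carry out of the
    last counter is simply dropped, like a digit wheel rolling over.
    """
    for place, ring in enumerate(counters):
        digit = amount % 10
        amount //= 10
        for _ in range(digit):
            if ring.pulse():
                carry(counters, place + 1)


def value(counters):
    """Read the stored number back from the counter positions."""
    return sum(ring.position * 10 ** i for i, ring in enumerate(counters))


counters = [RingCounter() for _ in range(4)]  # a four-digit accumulator
add(counters, 278)
add(counters, 345)
print(value(counters))  # 623
```

Each digit is added one pulse at a time, which is why the scheme resembles turning the wheels of a mechanical adding machine.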

Photo courtesy of the U.S. Army. ENIAC, short for Electronic Numerical Integrator And Computer, was the first all-electronic computer.

In the years after the first computers, like the ENIAC, experimental discoveries showed that transistors could perform the same functions as vacuum tubes.

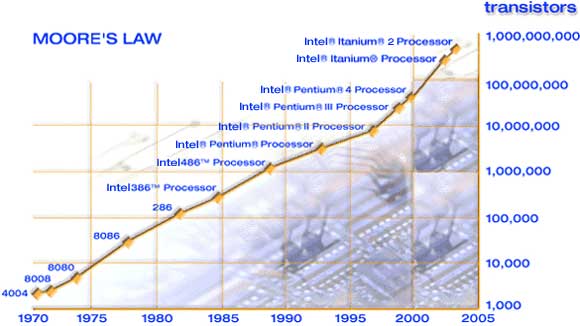

In 1979, the Intel 8088 microprocessor (a type of integrated circuit) could carry out 300,000 operations per second. In 2000, the Pentium 4, also manufactured by Intel, carried out about 1,700,000,000 operations per second, which is nearly 6,000 times as many! This exponential growth reflects Moore’s Law, which describes the number of transistors per integrated circuit. In 1965, just four years after the first planar integrated circuit was developed, Gordon Moore observed that the number of transistors per integrated circuit was doubling at a regular pace, a rate later put at about every two years. In 2004, a chip of silicon measuring 0.02 inches (0.5 mm) square held the same capacity as the ENIAC, which took up a large room.
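The arithmetic behind this comparison can be checked in a few lines. The sketch below uses only the two speed figures quoted above and treats operations per second as a rough stand-in for growth. That is an assumption, since Moore's Law itself is about transistor counts, not speed. The variable names are illustrative.

```python
import math

ops_8088 = 300_000            # operations per second in 1979 (figure from the text)
ops_pentium4 = 1_700_000_000  # operations per second in 2000 (figure from the text)

ratio = ops_pentium4 / ops_8088      # how many times faster
doublings = math.log2(ratio)         # how many doublings that speed-up represents
years = 2000 - 1979
years_per_doubling = years / doublings

print(f"speed-up: about {ratio:,.0f} times")
print(f"doublings: about {doublings:.1f}")
print(f"one doubling roughly every {years_per_doubling:.1f} years")
```

By this measure the speed-up corresponds to between 12 and 13 doublings in 21 years, or one doubling somewhat less than every two years.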

Image courtesy of Intel Corporation

This is a portion of a semiconductor device showing several printed circuits. Each color represents a different material.

Back in 1943, when Thomas Watson, chairman of IBM, reportedly said, "I think there is a world market for maybe five computers," he had no idea that his own company would be a key player in making computers ubiquitous in our everyday lives. Computers are getting faster and smaller and are spreading with astonishing speed everywhere in the world. Life as we know it today would not function without the computer chip. Only half a century after its invention, the chip can be found in almost anything requiring speed and complexity, from a DVD player to a jet fighter.

A computer chip can run the electrical supply network of a city. It can also run the microwave you use to heat up a snack, or the cell phone you use to connect with friends and family. Indeed, many scholars believe that the computer is one of mankind’s most significant inventions.

This content has been re-published with permission from SEED. Copyright © 2024 Schlumberger Excellence in Education Development (SEED), Inc.